If we truly wish to move toward a healthcare system that reliably delivers high-quality care, we must confront one of the great flaws of our current system: incentives are not always aligned with that goal. Indeed, we could make a strong argument that incentives, particularly financial incentives, often lead healthcare providers (sometimes individuals, but certainly large organizations such as hospitals, nursing home and hospital chains, pharmaceutical companies, and device manufacturers) in the wrong direction. That is, they pursue financial profitability rather than the highest quality of care for our people.

Sometimes these two run together, and sometimes they do not. If the disease you have is one that is well-provided for and you have the money or insurance to pay for it, you are in luck. If you don’t have financial access to care, or your disease’s “product line” was not one deemed financially profitable enough for your hospital or health system to invest in, you are not. Similarly, if you have a disease that lots of others share, pharma is always ready to provide a drug for it – particularly if it is still patented; if you have an “orphan” disease, you may not be able to get treatment, or it can cost (truly) more than $100,000 a year.

The federal government, through Medicare, has sought to use financial incentives (most often) to control costs and (sometimes) to encourage quality; this has occurred under both Republican and Democratic administrations, and it is also a big feature of the Affordable Care Act (ACA). One example, going back to the 1980s, is reimbursing hospitals for what Medicare has calculated to be the appropriate cost of care for a particular set of diagnoses, rather than for whatever the hospital charges. Under the ACA, and in incentive plans from private insurers, doctors get more money if they do more of the "right" things and fewer of the "wrong" things. Organizations can also use financial incentives to encourage certain types of performance from their employees or contractors. Examples include incentive payments for generating more revenue, or financial penalties written into a contract for poor performance. Financial incentives are not unique to health care and, in fact, have been used and studied in many other industries. Nor is their use in health care unique to the US. The question, however, is: "Do they work?"

This is the question that a group of Australian scholars led by Paul Glasziou sought to answer in an "Analysis" published in the British Medical Journal (subscription required), "When financial incentives do more good than harm: a checklist."[1] Glasziou and his colleagues review the data on the effectiveness of financial incentives in both health care and other industries, focusing on a meta-analysis by Jenkins et al.[2] and two Cochrane reviews: an overview of 4 systematic reviews (a meta-meta-analysis?) by Flodgren et al.[3], and a review of incentives in primary care by Scott et al.[4] Basically, the results were mixed; sometimes the incentives achieved the desired ends and sometimes they didn't. Glasziou observes: "While incentives for individuals have been extensively examined, group rewards are less well understood…. Finally, and most crucially, most studies gathered few data on potential unintended consequences, such as attention shift, gaming, and loss of motivation." (Again, see Daniel Pink, "Drive", on motivation.)

In an effort to help identify what works, Glasziou and colleagues have developed a 9-item "checklist" for financial incentives in health care that is the centerpiece of the article. Six items relate to the question "Is there a remediable problem in routine clinical care?", and 3 relate to design and implementation. The first six are:

1. Does the desired clinical action improve patient outcomes?

2. Will undesirable clinical behavior persist without intervention?

3. Are there valid, reliable, and practical measures of the desired clinical behavior?

4. Have the barriers and enablers to improving clinical behavior been assessed?

5. Will financial incentives work, and better than other interventions to change behavior, and why?

6. Will benefits clearly outweigh any unintended harmful effects, and at an acceptable cost?

And the 3 regarding implementation are:

7. Are systems and structures needed for the change in place?

8. How much should be paid, to whom, and for how long?

9. How will the financial incentives be delivered?

They explain each of these and include a useful table that uses real-life positive and negative examples to illustrate their points. For example, regarding #1, they note that the UK has provided financial incentives to get the glycated hemoglobin level in people with type 2 diabetes below 7%, despite several studies showing no patient benefit. (This is an example of "expert opinion" governing practice even after it has been contradicted by good research.) #2 recognizes that some desirable behaviors will emerge, and some undesirable ones will fade, once effective processes are put in place, without any financial incentive at all. #3 matters because measurement itself is costly to implement ("We found no studies on the cost of collecting clinical indicators."); one of the great complaints of providers is that they spend so much time providing information to various oversight bodies that they do not have enough time to provide good patient care.

Criterion #5 relates to the question "What, in fact, motivates people?" Criterion #6 addresses what I believe is the greatest flaw in most of our financial incentive systems (often called "pay for performance"). The four unintended harms that most often arise have all been discussed in this blog:

Attention shift (focusing on the area being rewarded distracts from attention to other areas);

Gaming (a huge negative, especially for large organizations). This specifically refers to manipulating data to look "good" on the measure, but it also includes upcoding and what might be called intentional attention shift, where the organization deliberately focuses on the areas that make it the most money and neglects others;

Harm to the patient-clinician relationship, when the patient, often correctly, feels that it is not her or his benefit but some external target that is motivating providers;

Reduction in equity. This is extremely important. I have written extensively about health disparities; this point drives home the reality that these inequities, or disparities, can persist even when there is an overall improvement in the areas being measured.

As the authors put it: "Most of these issues and several others derive from the simplistic application of financial rewards to complex interdependent systems. Financial incentives assume that paying more for a service will lead to better quality or additional capacity, or both. However, because money is only one of many internal and external influences on clinical behavior, many factors will moderate the size and direction of any response. The evidence on whether financial incentives are more effective than other interventions is often weak and poorly reported."

These authors are from Australia, which, like most developed countries, has a national health insurance system (see the map[5]). The data they cite are worldwide, but largely from their own country, the UK (which also has a national health system), and the US (which does not). The real problems of health disparities and inequity are enormous in our country. Here they are not tempered by a national health system, which reduces many of the financial barriers to health care; indeed, they are exacerbated by a make-money, business-success psychology among providers that may be worse in the for-profit sector but essentially drives the non-profit sector as well.

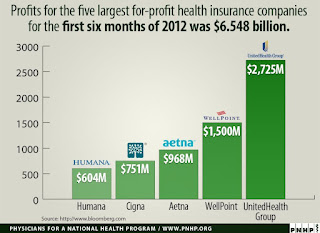

The application of a simplistic corporate psychology to health care delivery can lead to poorer quality and greater inequity in any country. Combined with an entire system built on making money, gaming the system, excluding the poor, and generating corporate profit (see graph), it is a disaster. Our disaster.

[1] Glasziou P, et al. When financial incentives do more good than harm: a checklist. BMJ 2012;345:e5047. doi:10.1136/bmj.e5047. Published August 20, 2012.

[2] Jenkins GD, Mitra A, Gupta N, Shaw JD. Are financial incentives related to performance? A meta-analytic review of empirical research. J Appl Psychol 1998;83:777-87.

[3] Flodgren G, Eccles MP, Shepperd S, Scott A, Parmelli E, Beyer FR. An overview of reviews evaluating the effectiveness of financial incentives in changing healthcare professional behaviours and patient outcomes. Cochrane Database Syst Rev 2011;7:CD009255.

[4] Scott A, Sivey P, Ait Ouakrim D, Willenberg L, Naccarella L, Furler J, et al. The effect of financial incentives on the quality of health care provided by primary care physicians. Cochrane Database Syst Rev 2011;9:CD008451.

[5] Interestingly, this map, from the Atlantic, may make us think that the isolation of the US in not having national health care is less serious than it is. Most adults are used to seeing map projections that inflate the size of Europe and North America. This map is geographically more accurate; drawn in our "accustomed" projection, it would look even greener.
